DistributedDataParallel non-floating point dtype parameter with

🐛 Bug: Using DistributedDataParallel on a model that has at least one non-floating-point dtype parameter with requires_grad=False, with a WORLD_SIZE <= nGPUs/2 on the machine, results in the error "Only Tensors of floating point dtype can re

55.4 [Train.py] Designing the input and the output pipelines - EN - Deep Learning Bible - 4. Object Detection - Eng.

Access Authenticated Using a Token_ModelArts_Model Inference_Deploying an AI Application as a Service_Deploying AI Applications as Real-Time Services_Accessing Real-Time Services_Authentication Mode

Optimizing model performance, Cibin John Joseph

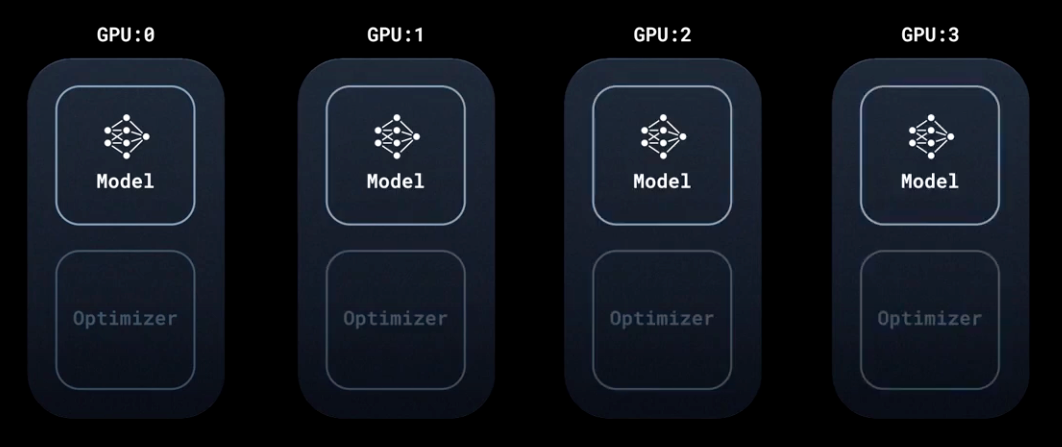

A comprehensive guide of Distributed Data Parallel (DDP), by François Porcher

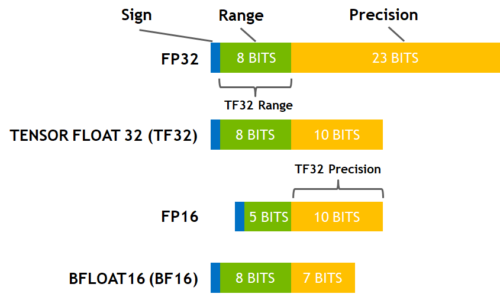

Number formats commonly used for DNN training and inference. Fixed

apex/apex/parallel/distributed.py at master · NVIDIA/apex · GitHub

Parameter Server Distributed RPC example is limited to only one worker. · Issue #780 · pytorch/examples · GitHub

How much GPU memory do I need for training neural nets using CUDA? - Quora

Multi-Node Multi-Card Training Using DistributedDataParallel_ModelArts_Model Development_Distributed Training

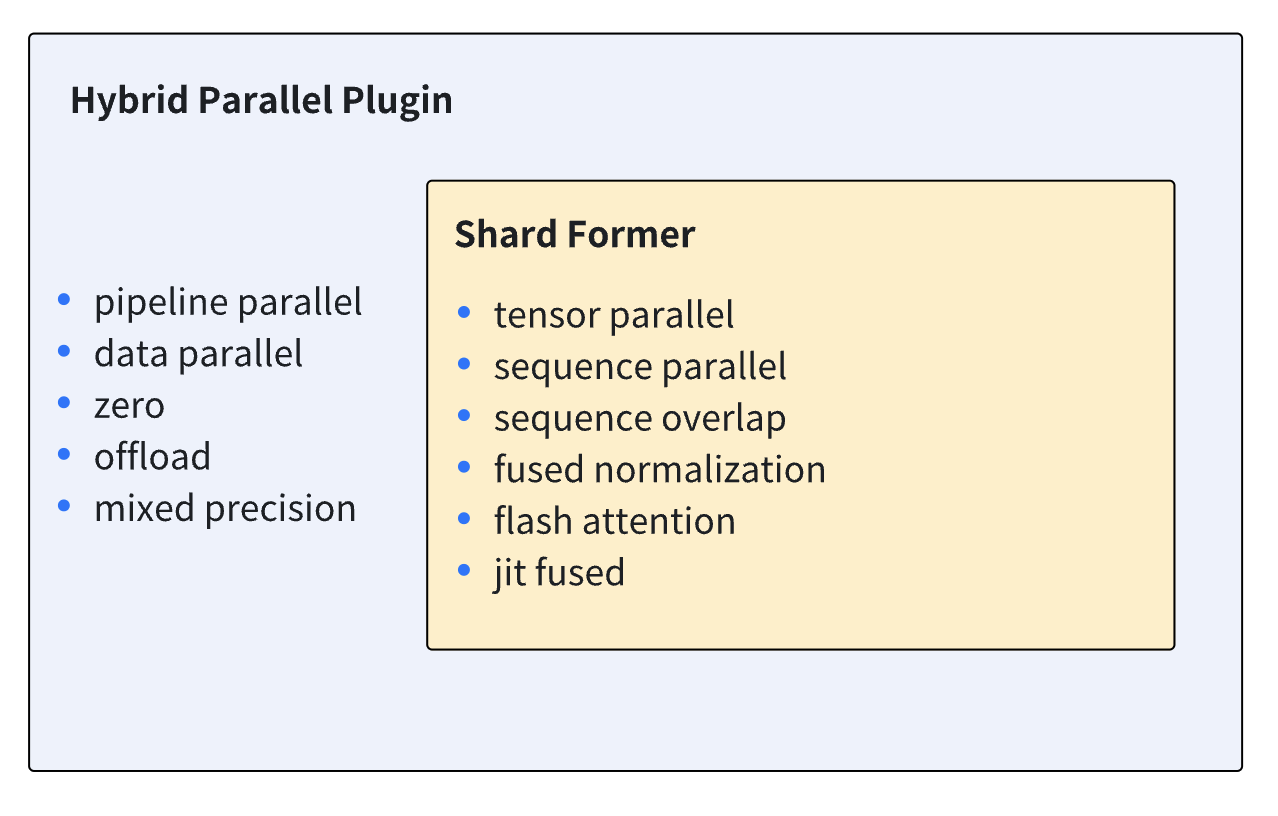

Booster Plugins

Error Message RuntimeError: connect() timed out Displayed in Logs_ModelArts_Troubleshooting_Training Jobs_GPU Issues

Performance and Scalability: How To Fit a Bigger Model and Train It Faster

fairscale/fairscale/nn/data_parallel/sharded_ddp.py at main · facebookresearch/fairscale · GitHub